AI Literacy Requirements Under the EU AI Act

The AI Act requires that employees possess AI literacy skills. In this article, you’ll get an overview of how to structure the training and meet the requirements in practice.

AI literacy

The purpose of the EU’s AI Act is to make sure that artificial intelligence (AI) is used safely, transparently and responsibly. It aims to reduce the risk of errors, misuse and discrimination.

That’s why the AI Act requires employees who use AI to have a basic understanding of how it works and how it affects their job.

More specifically, Article 4 of the AI Act requires providers and deployers of AI systems to take measures to ensure a sufficient level of AI literacy among their staff. In practice, this means employees must understand how AI systems work and be aware of the challenges involved in using them.

This rule has applied since 2 February 2025.

Where do you use AI systems?

AI is used in most workplaces and often without people noticing because it’s built into the systems employees use every day. Examples include customer service chatbots, writing and proofreading tools and data analysis software.

This means that AI is quietly shaping the workday, especially when employees use these tools to make decisions about customers, colleagues or citizens.

That's why your colleagues need AI literacy to understand how the technology works.

Examples of AI systems

You might recognise some of these AI systems from your own work – they all make use of artificial intelligence:

Microsoft Copilot is an AI system that helps write documents in Word, create presentations in PowerPoint and analyse data in Excel.

OpenAI’s ChatGPT is an AI system that generates text, ideas and images. OpenAI also offers an API that can be integrated into other platforms.

HubSpot uses AI to automatically target and customise marketing, analyse customer interactions and improve the sales process.

Salesforce applies AI to automate sales forecasting, manage customer queries and recommend ways to grow the customer base.

Each of these tools requires specific AI literacy knowledge for safe and compliant use.

What are the requirements of AI literacy?

The AI Act requires organisations that are classified as either a ‘provider’ or ‘deployer’ of AI systems to ensure that everyone involved in running or using those systems has appropriate AI skills.

The level of AI literacy should take into account their technical knowledge, experience, education and training. It should also reflect the context in which the AI system is used, who it is used on and the risks involved.

So, if your organisation uses AI, this requirement applies to you. You must ensure that relevant employees receive proper AI literacy training.

Essential AI Literacy Skills for Employees

Start by deciding who will take responsibility for managing the AI training and communication in your organisation. This could be your compliance officer, GDPR manager, HR manager or the person who happens to know the most about AI and how it’s used in your company.

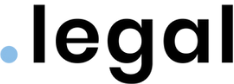

That person should then identify which of your systems use AI. To do this, they can use your GDPR compliance documentation as a starting point by taking a look at the information assets you have already registered. Once the systems have been identified, they can see what the AI is used for and which employees use those systems in their daily work.

This gives you the basis for designing training programmes that fit how employees actually use AI systems in their workday. The training should be tailored to the specific audience and include examples they recognise from their own tasks.
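The mapping step described above (find the AI systems, note what they are used for, list who uses them) can be sketched in a few lines of Python. This is only an illustration: the `Asset` fields, the `uses_ai` flag and the sample register are assumptions, not the format of any particular GDPR documentation tool.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """One entry in a (hypothetical) information asset register."""
    name: str
    uses_ai: bool
    purpose: str
    users: list[str] = field(default_factory=list)


def ai_systems(register: list[Asset]) -> list[Asset]:
    """Keep only the assets that have been flagged as using AI."""
    return [asset for asset in register if asset.uses_ai]


def systems_by_employee(register: list[Asset]) -> dict[str, list[str]]:
    """Map each employee to the AI systems they use in their daily work."""
    mapping: dict[str, list[str]] = {}
    for asset in ai_systems(register):
        for user in asset.users:
            mapping.setdefault(user, []).append(asset.name)
    return mapping


# Made-up sample data for illustration only.
register = [
    Asset("CRM", True, "sales forecasting", ["alice", "bob"]),
    Asset("Payroll", False, "salary payments", ["carol"]),
    Asset("Support chatbot", True, "customer queries", ["bob"]),
]

print(systems_by_employee(register))
# {'alice': ['CRM'], 'bob': ['CRM', 'Support chatbot']}
```

The resulting map shows which employees need training and which systems their examples should come from.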

The training programme should build understanding of why it’s important to have basic knowledge of AI use. Show employees how this knowledge helps them do their jobs better and more safely – for themselves, their colleagues and the people they work with.

Finally, make sure to document the training: who completed it, when and how it was delivered.

Also remember to update the training programme whenever new AI systems are introduced or existing ones are significantly changed. And of course, new employees must also go through the training as part of their onboarding.
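As a rough sketch of the documentation and update rules above, a training record can store who completed the training, when and how, and a small check can flag anyone who needs training (again). The record fields and the `needs_training` helper are hypothetical names chosen for this example, and the dates are invented.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class TrainingRecord:
    employee: str
    system: str
    completed_on: date
    delivery: str  # how it was delivered, e.g. "e-learning" or "workshop"


def needs_training(employee: str, system: str, system_changed_on: date,
                   records: list[TrainingRecord]) -> bool:
    """True if the employee has no record for this system dated on or
    after the system's introduction or last significant change."""
    return not any(
        r.employee == employee
        and r.system == system
        and r.completed_on >= system_changed_on
        for r in records
    )


# Invented sample data.
records = [TrainingRecord("alice", "CRM", date(2025, 3, 1), "e-learning")]

# Trained after the system was introduced: nothing to do.
print(needs_training("alice", "CRM", date(2025, 1, 15), records))  # False
# The system changed significantly after her training: retrain.
print(needs_training("alice", "CRM", date(2025, 6, 1), records))   # True
# A new employee has no record yet: train during onboarding.
print(needs_training("dave", "CRM", date(2025, 1, 15), records))   # True
```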

What skills should be included in an AI literacy programme?

If you’re unsure where to start with your internal AI literacy training programme, here is a suggestion to get you going:

Suggested AI literacy training programme

- The basics of artificial intelligence
  - What are machine learning, neural networks and large language models (LLMs)?
  - What are the differences between the various technologies?
  - Where is AI typically used (e.g. finance, energy, public sector)?
- Introduction to the AI Act
  - Teach the AI literacy requirement and what it means for employees
  - Explanation of prohibited AI practices, high-risk AI systems, limited-risk systems and minimal or no-risk systems
  - Examples of legal requirements like transparency, traceability and documentation
- Responsible use of AI
  - What is bias and how does it occur in AI?
  - Introduction to data protection and individuals’ fundamental rights (GDPR)
- Your organisation’s internal guidelines
  - Walkthrough of relevant internal policies and procedures for using AI
  - Practical case study based on how AI is used in your organisation
- Targeted training for specific employee groups
  - Tailor training to different roles, such as: 1) Developers and technical staff; 2) Legal and compliance teams; 3) Management; 4) Customer service and support staff.
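One way to picture the suggested programme is as a shared core that everyone completes, plus add-ons per role. The sketch below assumes exactly that split. The core module names come from the list above, while the role keys and add-on titles are hypothetical placeholders you would replace with your own.

```python
# Core modules taken from the suggested programme.
CORE_MODULES = [
    "The basics of artificial intelligence",
    "Introduction to the AI Act",
    "Responsible use of AI",
    "Your organisation's internal guidelines",
]

# Hypothetical role-specific add-ons (titles invented for illustration).
ROLE_MODULES = {
    "developer": ["Technical risks of machine learning models"],
    "legal": ["AI Act obligations in depth"],
    "management": ["AI governance and accountability"],
    "customer_service": ["Using AI chatbots responsibly with customers"],
}


def curriculum(role: str) -> list[str]:
    """Return the core modules plus any add-ons for the given role.

    Roles without a specific entry simply get the core modules.
    """
    return CORE_MODULES + ROLE_MODULES.get(role, [])


for module in curriculum("legal"):
    print(module)
```

Keeping the core separate from the add-ons means a change to the shared content only has to be made once.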

How do organisations carry out their AI literacy training?

Plenty of companies are already meeting the requirement to train their staff in AI literacy through internal programmes.

IBM offers an internal learning platform that uses AI itself to recommend relevant courses based on an employee’s role. For example, someone in HR might be offered a course on AI ethics, while a software developer is led towards more technical AI training.

Workday is a provider of HR software that supports AI skills through its annual awareness training. Developers working with machine learning and AI receive additional, specialised training. All employees are also trained on the company’s general policies and procedures, which include the responsible use of AI.

Telefónica has integrated AI training into its existing awareness and learning programmes. It has also appointed ‘super users’ in different departments: employees trained to act as local experts in the responsible use of AI. They serve as internal points of contact that colleagues can turn to for help with AI systems, risk assessments and legal considerations. This approach ensures that AI knowledge is available right where the technology is being used.

Summary

Most organisations already use AI systems, which makes employee training essential. However, the AI Act isn’t the only EU regulation that requires your organisation to train its employees. So, it would be smart to coordinate your AI literacy training with the training you already run for GDPR and NIS2.

What specific AI literacy skills are required under the EU AI Act?

AI literacy skills include understanding how AI systems work, recognising AI limitations and biases, knowing your organisation's AI policies, and applying responsible AI practices. Employees need practical knowledge about the AI tools they use daily and awareness of the AI regulations that affect their work.

How do I implement AI literacy training programmes in my organisation?

Start by mapping existing AI systems using your compliance documentation, assign responsibility to a compliance officer or manager, develop role-specific AI literacy content, and document all training activities. The AI literacy programme should align with your EU AI Act compliance requirements and be updated when new AI systems are introduced.

What's the difference between AI literacy and general AI training?

AI literacy focuses specifically on what the AI Act requires. While general AI training might cover technical skills, AI literacy emphasises understanding risks, recognising bias, protecting individual rights, and following your organisation's responsible AI policies.

Can I use existing compliance training for AI literacy requirements?

Yes, AI literacy training can be integrated with existing GDPR, NIS2, and information security programmes. However, it must specifically address AI Act requirements, including employee understanding of AI systems, risk awareness, and compliance with regulations on AI.



Info

.legal A/S

hello@dotlegal.com

+45 7027 0127

VAT-no: DK40888888


.legal is not a law firm and is therefore not under the supervision of the Bar Council.